What are the different file types used in Terraform?

- `.tf` – Define Infrastructure Using HCL:
  - The most common file type, used for defining providers, resources, variables, outputs, locals, and modules
  - Example: `main.tf`, `variables.tf`, `outputs.tf`
  - All `.tf` files in a folder are merged automatically
- `.tf.json` – Machine-Readable Configuration Format:
  - Same as `.tf`, but written in JSON; useful for tools that generate Terraform code programmatically
  - Rarely written by hand
- `.tfvars` – Pass External Input Values:
  - Used to assign values to variables declared in `.tf` files
  - Helps customize configurations for different environments
  - Example: `terraform.tfvars` (loaded by default), `dev.tfvars` (passed explicitly with `terraform apply -var-file=dev.tfvars`)
- `terraform.tfstate` – Track Real Infrastructure:
  - Stores the current known state of managed infrastructure
  - Should be stored securely (use remote backends)
- `terraform.tfstate.backup`:
  - Backup copy of the previous state, to help recover from accidental changes or failures
- `.terraform.lock.hcl` – Dependency Lock File:
  - Records the exact versions (and checksums) of the Terraform providers used in the current configuration
  - Ensures consistent runs across machines and teams
  - Automatically created and updated by Terraform during `terraform init`
  - Should be committed to version control
- `.terraform/` – Hidden Directory:
  - Stores cached provider plugins and module metadata
  - Created by `terraform init`
  - Can be deleted safely (the next `terraform init` recreates it)
  - Do NOT version control
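A minimal sketch of the `.tf` / `.tfvars` split described above. The variable names `region` and `instance_count` and their values are hypothetical examples; the file names follow the conventions listed in this section.

```hcl
# variables.tf: declarations (variable names are hypothetical examples)
variable "region" {
  type        = string
  description = "AWS region to deploy into"
}

variable "instance_count" {
  type    = number
  default = 1
}

# terraform.tfvars: loaded automatically by terraform plan/apply
region         = "us-east-1"
instance_count = 3

# dev.tfvars: used only when passed explicitly, e.g.
#   terraform apply -var-file=dev.tfvars
instance_count = 1
```

When both files are in play, `instance_count` resolves to 1 for that apply, because values passed with `-var-file` take precedence over `terraform.tfvars`; `region` still comes from `terraform.tfvars`.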

Sample `.terraform.lock.hcl`:

```hcl
# This file is maintained automatically by "terraform init".
# Manual edits may be lost in future updates.

provider "registry.terraform.io/hashicorp/aws" {
  version     = "5.61.0"
  constraints = "SOME_VALUE"
  hashes = [
    "HASHES",
    "HASHES",
  ]
}
```

Can you recommend a Terraform Project File Structure?

```text
terraform-project/
├── main.tf            # Primary configuration file defining resources
├── providers.tf       # Provider declarations (e.g., AWS, Azure)
├── backend.tf         # State backend configuration (e.g., Amazon S3)
├── data.tf            # Data source declarations
├── variables.tf       # Variable declarations
├── outputs.tf         # Output definitions
├── locals.tf          # Local values
├── terraform.tfvars   # Variable values
└── README.md          # Documentation
```

- Understanding Need for Organized Terraform Projects: As infrastructure grows, unorganized code becomes hard to maintain, reuse, or debug; a clean file structure improves readability, modularity, and team collaboration
- `main.tf` – Core Resource Definitions: Contains the main infrastructure resources (e.g., compute, storage, networking)
- `variables.tf` – Input Variables: Declares all `variable` blocks used in the project, with optional types and defaults
- `outputs.tf` – Output Values: Defines values (like IDs, IPs) to be shared or referenced externally
- `providers.tf` – Provider Configuration: Configures providers like AWS, Azure, Google Cloud, and authentication settings
- `locals.tf` – Local Values and Reusable Expressions: Stores constants or calculated values
- `terraform.tfvars` – Input Variable Assignments: Supplies values for variables declared in `variables.tf` (e.g., environment-specific values)
- `backend.tf` – Remote Backend Configuration: Configures state storage in remote services like S3
- (Best Practice) Keep Each File Focused: Separating concerns makes maintenance easier. The file names are a convention only; Terraform loads every `.tf` file in the directory regardless of its name
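A minimal sketch of how a few of these files might look in an AWS project, assuming an `aws` provider is already configured in `providers.tf`. The bucket name and state key are hypothetical placeholders, and `use_lockfile` (S3-native state locking) assumes Terraform 1.10 or later; older setups typically lock state with a DynamoDB table via `dynamodb_table` instead.

```hcl
# backend.tf: store state remotely (bucket and key are hypothetical)
terraform {
  backend "s3" {
    bucket       = "example-terraform-state-bucket"
    key          = "terraform-project/terraform.tfstate"
    region       = "us-east-1"
    encrypt      = true
    use_lockfile = true # S3-native locking; assumes Terraform 1.10+
  }
}

# data.tf: read information about existing infrastructure
data "aws_caller_identity" "current" {}

# locals.tf: reusable values computed once
locals {
  account_id = data.aws_caller_identity.current.account_id
  common_tags = {
    ManagedBy = "terraform"
  }
}

# outputs.tf: expose values to callers or the CLI
output "account_id" {
  value = local.account_id
}
```

Backend blocks cannot reference variables or locals, which is why the backend settings are literals while the other files are free to use expressions.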

What are Terraform Modules?

- Understanding Need for Reusability in Infrastructure Code: Writing the same Terraform code for VMs, VPCs, or databases across multiple projects leads to duplication and inconsistency
- What Are Terraform Modules: A collection of `.tf` files grouped together to perform a specific task (like provisioning a server or setting up a network) that can be reused across different Terraform configurations
- Helps Simplify, Organize, and Reuse Infrastructure Code: Modules allow you to break down large configurations and promote consistency across environments
- Reuse Common Infrastructure Patterns:
  - Create a reusable module for VPC setup or EC2 instances
  - Use the same module in different applications (with different inputs)
- Separate Logic for Better Maintainability:
  - Break complex configs into smaller, focused modules (e.g., `network`, `database`, `compute`)
- Share Infrastructure Configuration Across Teams:
  - Teams can publish modules to the Terraform Registry or internal repositories for reuse
- Accepts Inputs and Produces Outputs: Use `variable` and `output` blocks inside the module to customize behavior and return values
- Supports Local and Remote Sources: Modules can be stored locally (`./modules/vpc`) or remotely (GitHub, Terraform Registry)

```hcl
# Let's create a User Module
# Purpose: To create IAM users for your application
# Input: Name of the application
# Output: Details of the created IAM user (e.g., name, ARN)

# PROJECT STRUCTURE
# terraform-project/
# ├── app-a/
# │   └── main.tf          # Uses the IAM user module for app-a
# │
# ├── app-b/
# │   └── main.tf          # Uses the IAM user module for app-b
# │
# ├── modules/
# │   └── users/
# │       ├── main.tf      # Defines IAM user resource
# │       ├── outputs.tf   # Outputs full IAM user details
# │       └── README.md    # Module documentation (optional)
# │
# └── README.md            # Project-level documentation (optional)

# ✅ BENEFITS OF THIS STRUCTURE
# - Reusable IAM user logic in users module
# - Easy to re-use across apps or projects
# - Clean separation between logic and usage

# ---------------------------------------------
# 📁 modules/users/main.tf

variable "application" {
  type    = string
  default = "default"
}

# No provider block in the module: it inherits the AWS provider
# configured by the root module that calls it (app-a or app-b)

# This can be a complex resource
# Network or EC2 Instance or ..
resource "aws_iam_user" "my_iam_user" {
  name = "iam_user_for_${var.application}_application"
}

# ---------------------------------------------
# 📤 OUTPUT BLOCK
# 📁 modules/users/outputs.tf

output "my_iam_user_complete_details" {
  value = aws_iam_user.my_iam_user
}

# ---------------------------------------------
# 🚀 MODULE USAGE - APP A
# 📁 app-a/main.tf
# WHAT: Uses the IAM user module
# WHY: Creates user specific to "app-a"

provider "aws" {
  region = "us-east-1"
}

module "user_module" {
  source      = "../modules/users"
  application = "app-a"
}

# OTHER app-a RESOURCES GO HERE

# ---------------------------------------------
# 🚀 MODULE USAGE - APP B
# 📁 app-b/main.tf
# WHAT: Uses the same module
# WHY: Creates user specific to "app-b"

provider "aws" {
  region = "us-east-1"
}

module "user_module" {
  source      = "../modules/users"
  application = "app-b"
}

# OTHER app-b RESOURCES GO HERE
```
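In the IAM user example, the root module can read the module's output through `module.<module_name>.<output_name>`. A minimal sketch for `app-a/main.tf`; the output names `app_a_user_name` and `app_a_user_arn` are hypothetical, while `name` and `arn` are standard attributes of the `aws_iam_user` resource returned by the module's `my_iam_user_complete_details` output.

```hcl
# 📁 app-a/main.tf (continued)
# Expose selected attributes of the IAM user created by the module.
# The output names below are hypothetical examples.
output "app_a_user_name" {
  value = module.user_module.my_iam_user_complete_details.name
}

output "app_a_user_arn" {
  value = module.user_module.my_iam_user_complete_details.arn
}
```

After `terraform apply` in `app-a/`, running `terraform output app_a_user_arn` prints the ARN. Exposing only specific attributes keeps the root module's interface narrow even though the module itself returns the whole resource object.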

DIFFERENT TYPES OF MODULES

```text
# PROJECT STRUCTURE
terraform-project/
├── main.tf
├── variables.tf
├── provider.tf
├── others.tf
├── modules/
│   ├── vpc/
│   │   ├── main.tf
│   │   ├── outputs.tf
│   │   └── README.md
│   ├── ec2/
│   │   ├── main.tf
│   │   ├── outputs.tf
│   │   └── README.md
│   └── rds/
│       ├── main.tf
│       ├── outputs.tf
│       └── README.md
│
└── README.md
```

- Root Module: The module defined in the root of the Terraform configuration directory where you run Terraform commands
- Child Modules (Local Modules): Modules called by another module; local modules are stored in your project directory (e.g., `./modules/vpc`) and can be reused across configurations
- Registry Modules: Published and versioned modules hosted on public or private registries, like the official Terraform Registry
- Remote Modules: Modules fetched from remote sources such as GitHub

Example:

```hcl
module "vpc" {
  source = "./modules/vpc" # Local module
}

module "network" {
  source  = "terraform-aws-modules/vpc/aws" # Registry module
  version = "3.11.0"                        # version pinning applies to registry sources
}

module "custom" {
  source = "git::https://github.com/org/repo.git//modules/custom" # Remote (Git) module
}
```

What is the need for Terraform Workspaces?

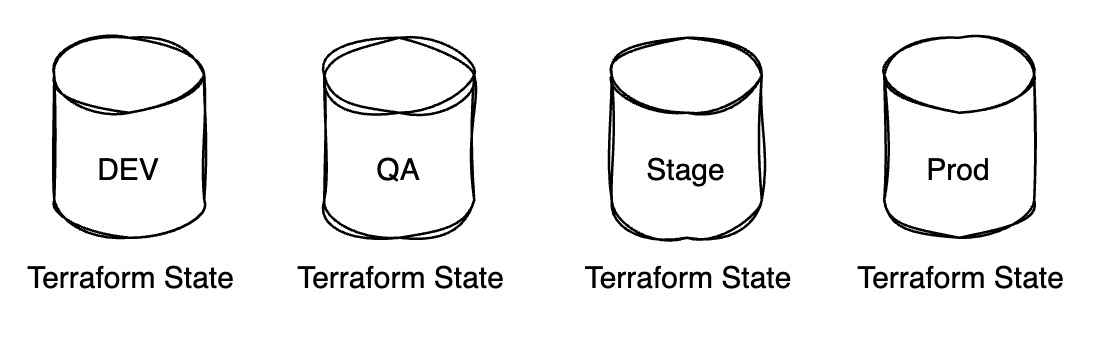

- Understanding Need to Manage State in Multiple Environments: Dev, QA, and Prod environments often share the same Terraform code but require different state files
- Terraform Workspace: A named instance of Terraform's state that allows you to use the same configuration to manage multiple environments with isolated state
- Keeps Separate State Files for Each Workspace: With the local backend, the `default` workspace uses `terraform.tfstate` and every other workspace is stored under `terraform.tfstate.d/<workspace>/`; remote backends keep each workspace's state separately
- Manage Dev, QA, Stage and Prod with Same Code: Use different workspaces to provision similar infrastructure across environments
- Test Infrastructure Changes Safely: Try new changes in a test workspace without affecting production
- Create a New Workspace: `terraform workspace new dev` – Creates and switches to a new workspace named `dev`
- List All Workspaces: `terraform workspace list` – Shows all existing workspaces; the current one is marked with `*`
- Select an Existing Workspace: `terraform workspace select prod` – Switches to the `prod` workspace
- Delete a Workspace: `terraform workspace delete test` – Deletes the `test` workspace (you must switch out of it first)
- Show Current Workspace: `terraform workspace show` – Displays the name of the currently active workspace
- Use Workspaces for Lightweight Multi-Env Setups: Well suited to small-to-medium projects that want to avoid repetition but still need environment isolation
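Inside the configuration, the built-in `terraform.workspace` value returns the name of the active workspace, which lets one code base vary names and sizes per environment. A minimal sketch; the bucket prefix `example-app-artifacts` and the per-workspace instance-type map are hypothetical, and real S3 bucket names must be globally unique.

```hcl
locals {
  environment = terraform.workspace

  # Hypothetical per-workspace settings; unknown workspaces fall back to "t3.micro"
  instance_type_by_env = {
    dev  = "t3.micro"
    qa   = "t3.small"
    prod = "t3.large"
  }
  instance_type = lookup(local.instance_type_by_env, local.environment, "t3.micro")
}

resource "aws_s3_bucket" "artifacts" {
  # One bucket per workspace, e.g. example-app-artifacts-dev (hypothetical prefix)
  bucket = "example-app-artifacts-${local.environment}"

  tags = {
    Environment = local.environment
  }
}

output "selected_instance_type" {
  value = local.instance_type
}
```

Because the workspace name is part of the bucket name, resources created in `dev` and `qa` do not collide even though they come from the same code.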

Explain a Sample Step By Step Workflow of Using Two Workspaces

- Create Two Workspaces – Dev and QA
  - `terraform workspace new dev` – Creates and switches to the `dev` workspace
  - `terraform workspace new qa` – Creates and switches to the `qa` workspace
- Switch to Dev Workspace
  - `terraform workspace select dev` – Activates the `dev` workspace
  - Run `terraform plan` and `terraform apply` to provision resources for Dev
- Switch to QA Workspace
  - `terraform workspace select qa` – Switches to the `qa` workspace
  - Run `terraform plan` and `terraform apply` to create separate QA infrastructure
- Verify Workspace-Specific State
  - Each workspace has its own isolated `terraform.tfstate`
  - Resources in `dev` and `qa` are managed independently even though they share the same `.tf` configuration
- Destroy QA Resources Without Affecting Dev
  - `terraform workspace select qa`, then `terraform destroy` – Only deletes QA infrastructure
  - Dev resources remain untouched in their own workspace
- (Best Practice) Use Workspaces + Variable Files
  - Example: `terraform apply -var-file=dev.tfvars` in `dev`, and `terraform apply -var-file=qa.tfvars` in `qa`
  - Ensures proper configuration per environment while keeping code consistent
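One risk with the workspace + variable file pattern is applying `dev.tfvars` while the `qa` workspace is selected. A minimal guard sketch, assuming Terraform 1.4 or later (for the built-in `terraform_data` resource) and a hypothetical `environment` variable that each `.tfvars` file sets to its workspace name:

```hcl
# variables.tf
variable "environment" {
  type        = string
  description = "Must match the selected workspace (set in dev.tfvars / qa.tfvars)"
}

# guard.tf: fails the plan if the variable file and the workspace disagree
resource "terraform_data" "workspace_guard" {
  lifecycle {
    precondition {
      condition     = var.environment == terraform.workspace
      error_message = "The environment variable does not match the selected workspace."
    }
  }
}

# dev.tfvars:  environment = "dev"
# qa.tfvars:   environment = "qa"
#
# terraform workspace select qa
# terraform apply -var-file=qa.tfvars    # passes the guard
# terraform apply -var-file=dev.tfvars   # fails the precondition
```

The check runs during planning, so a mismatched variable file is rejected before any resource is changed.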

When do you use Terraform Enterprise?

- What is Terraform Enterprise: HashiCorp's self-hosted distribution of HCP Terraform (formerly Terraform Cloud, the SaaS offering), providing additional features for teams using Terraform at scale
- Manage Large Teams and Projects at Scale: Offers scalable architecture with workspaces, organizations, and user management
- Role-Based Access Control (RBAC): Manage who can view, plan, apply, or modify infrastructure across workspaces and teams
- Policy Enforcement: Enforce compliance rules (e.g., no public S3 buckets, region restrictions) before changes are applied, using policy-as-code frameworks such as Sentinel or OPA
- Private Module Registry: Share internal modules securely across teams
- Remote Operations with Secure State Management: Run plans and applies remotely and avoid local state files by using encrypted, versioned remote state
- If You Need an Internal, Secure Deployment: Choose self-hosted Terraform Enterprise; otherwise, HCP Terraform provides a similar workflow as a managed service
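A working directory is connected to Terraform Enterprise (or HCP Terraform) through the `cloud` block, available since Terraform 1.1. A minimal sketch; the hostname, organization, and workspace name are hypothetical placeholders. After authenticating with `terraform login <hostname>`, running `terraform init` links the directory to the remote workspace, and plans and applies then run remotely by default.

```hcl
terraform {
  cloud {
    # Hypothetical self-hosted Terraform Enterprise hostname;
    # omit this argument to use HCP Terraform (app.terraform.io)
    hostname     = "tfe.example.com"
    organization = "example-org"

    workspaces {
      name = "networking-prod"
    }
  }
}
```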

Explain a Few Terraform Best Practices

- Automate with CI/CD: Integrate Terraform with CI/CD pipelines to automate validation, planning, and application of infrastructure changes
- Use Version Pinning for Providers and Terraform: Lock specific versions to avoid unexpected breaking changes
- Organize Code into Modules: Break infrastructure into reusable, well-scoped modules for better maintainability
- Use Remote Backends with Locking: Store state files in a remote backend with locking and encryption
- Use `terraform workspace` for Multiple Environments: Isolate dev/staging/prod environments using named workspaces, or separate state files and directories
- Implement Resource Tagging: Apply consistent tags (e.g., Environment, Owner, Application) to all resources for better tracking, cost visibility, and compliance
- Avoid Hardcoding – Use Variables and `tfvars` Files: Keep your code flexible and environment-agnostic by using input variables and separate value files for each environment
- Never Commit State Files or Secrets to Git: State files can contain sensitive values in plain text, so add `terraform.tfstate`, `terraform.tfstate.backup` (e.g., the pattern `*.tfstate*`), and `.terraform/` to `.gitignore`
- Limit Use of Provisioners: Avoid `provisioner` blocks when possible; they are considered a last resort. Prefer configuration management tools.
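Two of these practices, version pinning and consistent tagging, can be expressed directly in configuration. A minimal sketch; the version constraints and tag values are hypothetical examples, and `default_tags` is a feature of the AWS provider that applies the listed tags to every taggable resource that provider creates.

```hcl
terraform {
  # Allow Terraform 1.6 and later 1.x releases, but not 2.0
  required_version = "~> 1.6"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.61" # 5.61 or any later 5.x release, but not 6.0
    }
  }
}

provider "aws" {
  region = "us-east-1"

  # Tags applied automatically to all taggable resources from this provider
  default_tags {
    tags = {
      Environment = terraform.workspace
      Owner       = "platform-team" # hypothetical
      Application = "example-app"   # hypothetical
    }
  }
}
```

The constraint sets the allowed range, and `.terraform.lock.hcl` then records the exact provider version selected within it, so every machine uses the same build until the lock file is deliberately updated.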

What are Immutable Servers?

1. The Problem with Mutable Servers (Traditional Approach): Traditionally, servers were provisioned once and then "tweaked" over time. If you needed to make a change (e.g., install new software, apply a security patch, update a configuration file):

- You would log into the existing server

- Execute a script or manual commands to apply the changes

This approach creates several problems:

- Configuration Drift: The actual state of the server deviates from its original, documented configuration. Different servers, even those intended to be identical, can gradually accumulate unique configurations and issues.

- Reproducibility Challenges: If you need to create a new server with the exact same updated configuration, you might have to manually re-run a sequence of undocumented or lost scripts, which is prone to errors.

- Debugging Difficulty: It becomes hard to trace exactly what changes were applied over time, complicating debugging.

2. The Solution: Immutable Servers with Infrastructure as Code: Immutable servers are servers that, once provisioned, are never modified. If any change is needed, a new server is provisioned with the updated configuration, and the old server is decommissioned.

- Process:

- Your Infrastructure as Code (e.g., Terraform) configuration is updated with the desired changes (e.g., a new software version, a security patch)

- A new server (or set of servers) is provisioned from this updated configuration

- Once the new server(s) are fully operational and verified, traffic is redirected to them

- The old server(s) are then decommissioned and destroyed

3. Advantages of Immutable Servers:

- Consistency and Reproducibility: Since servers are always created from the latest version-controlled configuration, there is no configuration drift. Every server is identical to others provisioned from the same code, ensuring consistency across environments (development, staging, production).

- Reliability: Building new servers from scratch ensures that all dependencies and configurations are correctly applied every time. This reduces the risk of hidden issues from incremental changes.

- Simplified Rollbacks: If a new server configuration has issues, you can quickly roll back by simply redirecting traffic to the previous version's servers (if still available) or destroying the new servers and provisioning from a previous, known-good configuration.

- Easier Debugging: If a problem occurs, you know exactly what configuration was used to build the server, simplifying debugging.

- Reduced Operational Complexity: Operations teams manage a consistent, repeatable build process rather than tracking individual server states.
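The replace-instead-of-modify process described above can be approximated in Terraform by baking changes into a new machine image and letting Terraform replace the instance. A minimal sketch, assuming the new image is built outside Terraform (for example with Packer) and passed in through a hypothetical `ami_id` variable. Changing `ami` forces Terraform to replace the instance rather than edit it, and `create_before_destroy` makes Terraform create the new instance before destroying the old one. Redirecting traffic (e.g., through a load balancer) is left out for brevity.

```hcl
variable "ami_id" {
  type        = string
  description = "ID of a pre-built image containing the new software version (hypothetical)"
}

resource "aws_instance" "web" {
  ami           = var.ami_id # a new AMI ID forces a replacement, not an in-place edit
  instance_type = "t3.micro"

  lifecycle {
    # Create the replacement first, then destroy the old instance
    create_before_destroy = true
  }

  tags = {
    Name = "web-immutable"
  }
}
```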