What is Terraform?

- Understanding Need for Infrastructure Automation: Manually creating servers, networks, databases, and permissions for every environment (Dev, QA, Prod) is slow and error-prone

- Terraform: An open-source tool that lets you define and provision cloud infrastructure using simple, declarative configuration files

- Solves for Repeatability and Consistency: Infrastructure is defined as code and can be version-controlled, reviewed, and reused across teams and environments

- Eliminates Manual Infrastructure Setup: Automates the provisioning of servers, databases, and networks, reducing manual errors and delays

- Works Across Cloud Providers and Services: Supports AWS, Azure, Google Cloud, Kubernetes, GitHub, and more using the same tool and very similar syntax

- Enables Version Control for Infrastructure: Infrastructure code can be stored in Git, so every change is tracked, reviewable, and can be rolled back

- Improves Team Collaboration and Workflow: Infrastructure changes can be reviewed, tested, and approved just like application code

- Integrates Easily with CI/CD Pipelines: Enables automatic infrastructure updates as part of your DevOps workflows

- Reduces Cloud Costs Through Automation: `terraform destroy` removes every resource Terraform is tracking, so temporary environments can be torn down completely without leaving forgotten resources behind!

When should you use and when should you NOT use Terraform?

When should you NOT use Terraform?

- When You Need Fine-Grained Configuration Management: Tools like Ansible, Chef, or Puppet are better suited for managing OS configurations, package installations, and runtime processes

- When Your Infrastructure is Small and Rarely Changes: For very simple setups with minimal updates, Terraform may be overkill and add unnecessary complexity

- When You Prefer GUI-Based Provisioning: If your team is non-technical or prefers clicking through AWS/Azure/Google Cloud consoles, Terraform’s code-first approach may not be ideal

- When You Want Quick One-Time Changes: For quick, temporary changes, using the cloud provider console or CLI may be faster than writing and applying Terraform code

- When Your Team Lacks Git Practices: Terraform works best with version control workflows (branches, pull requests, reviews); without them, infrastructure changes are hard to track, review, and coordinate

When should you use Terraform?

- When You Want to Automate Complex Cloud Infrastructure: Ideal for setting up VMs, databases, networks, and security groups automatically instead of doing it manually

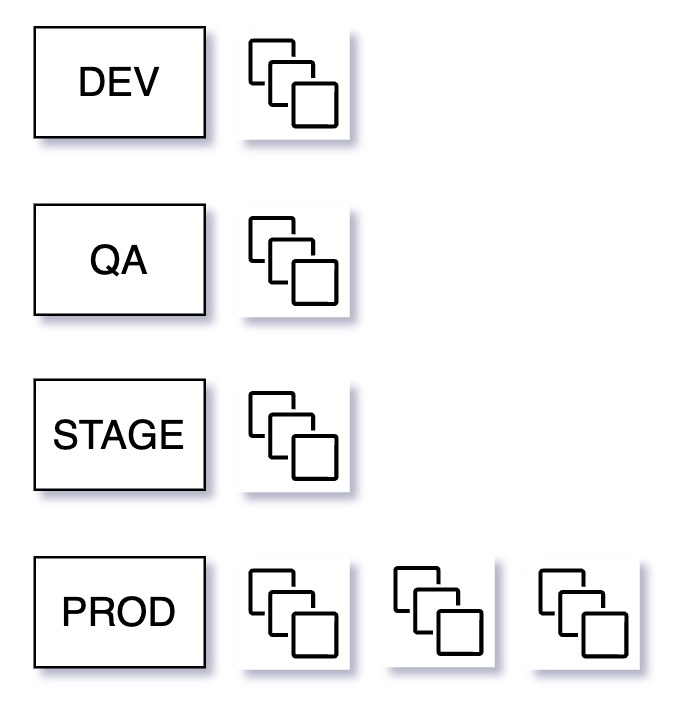

- When You Need Repeatable Environments: Useful for creating identical Dev, QA, and Prod environments to reduce environment-specific problems

- When You’re Managing Multi-Cloud or Hybrid Cloud: Works across AWS, Azure, Google Cloud, and on-premises infrastructure, which makes it a good fit for teams using more than one platform

- When Your Team Wants to Use Infrastructure as Code (IaC): Allows storing infrastructure definitions in Git for better collaboration, review, and rollback

- When You Want to Integrate Infrastructure into CI/CD Pipelines: Terraform fits well into automated pipelines for faster and safer deployments

- When You’re Adopting Immutable Infrastructure Practices: Makes it easier to recreate infrastructure from scratch instead of patching it manually
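To make the "Repeatable Environments" point concrete, here is a minimal sketch (not from the original) in which one configuration is parameterized by a hypothetical `environment` variable, so Dev, QA, and Prod differ only in the value passed in. The variable name, bucket naming scheme, and tag are illustrative assumptions, and an `aws` provider block is assumed to be configured as in the later examples.

```hcl
# Hypothetical input variable selecting the target environment
variable "environment" {
  type        = string
  description = "Target environment (dev, qa or prod)"

  # Reject typos such as "prd" before anything is created
  validation {
    condition     = contains(["dev", "qa", "prod"], var.environment)
    error_message = "The environment must be one of: dev, qa, prod."
  }
}

# The same resource definition is reused for every environment;
# only the name and tag change (hypothetical naming scheme)
resource "aws_s3_bucket" "app_data" {
  bucket = "my-app-data-${var.environment}" # must still be globally unique

  tags = {
    environment = var.environment
  }
}
```

Each environment is then created with, for example, `terraform apply -var="environment=qa"`. In practice each environment also needs its own state (for example a separate workspace or backend key); otherwise switching the value would replace the Dev bucket instead of creating a QA one next to it.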

How does Terraform compare to other tools that help with Infrastructure as Code?

- AWS CloudFormation: Native to AWS; allows defining infrastructure using JSON/YAML. Best for deep AWS integrations but lacks multi-cloud flexibility

- Azure Resource Manager (ARM) Templates / Bicep: Native IaC tools for Azure; Bicep improves readability over ARM’s verbose JSON. Best for Azure-specific projects.

- Google Cloud Deployment Manager: Google Cloud-native tool using YAML and Jinja2 templates. Suitable for Google Cloud-only setups but limited outside Google Cloud.

- Pulumi (Code-Driven IaC): Allows writing infrastructure using familiar languages like Python, JavaScript, TypeScript, and Go. Best for developers who want to define infrastructure in a programming language they already know. (No need to learn HCL!)

- Ansible: Primarily for configuration management (configuring and managing applications, processes and files on existing servers) BUT can also create infrastructure (using cloud modules - not recommended!)

- Chef / Puppet: Focused on configuring and managing servers after they’re provisioned. Less commonly used for full infrastructure provisioning.

Compare Terraform vs Ansible

| Feature | Terraform | Ansible |
|---|---|---|
| Purpose | Infrastructure provisioning and management | Configuration management and application deployment |
| Type | Declarative: describe the desired state; Terraform compares it with the current state and applies the changes | Primarily imperative: runs tasks step by step |
| Primary Use | Creating, modifying, and deleting cloud resources | Configuring and managing applications on existing servers |
| Primary Language/Format | HashiCorp Configuration Language (HCL) | YAML-based playbooks |
| State Management | Maintains a state file to track infrastructure changes | Stateless by default |
| Provisioning Efficiency | Efficient for creating and managing infrastructure at scale | Effective for configuration but less efficient for provisioning |
| Infrastructure Focus | Best suited for provisioning infrastructure on cloud providers like AWS, Azure, Google Cloud | Can do configuration management on both cloud and on-premises infrastructure |
| Configuration Management | Limited configuration management capabilities | Excellent for configuration management: configuring apps, services, files, and the OS on existing servers |
| My Recommendations | Use Terraform for provisioning | Use Ansible for configuration management |
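The recommendation in the last row often ends up as a hand-off: Terraform creates the server and exposes its address, and Ansible then configures it. This is a minimal, hypothetical sketch; the AMI ID is a placeholder, the resource and output names are made up, and it assumes an `aws` provider is configured and the instance lands in a subnet that assigns public IPs (such as the default VPC).

```hcl
# Terraform's job: provision the server
resource "aws_instance" "web" {
  ami           = "ami-0123456789abcdef0" # placeholder, replace with a real AMI ID
  instance_type = "t3.micro"

  tags = {
    Name = "web-server"
  }
}

# Expose the address so a configuration tool such as Ansible
# can target the server afterwards (e.g., via an inventory file)
output "web_public_ip" {
  value = aws_instance.web.public_ip
}
```

After `terraform apply`, running `terraform output -raw web_public_ip` prints the address, which can be written into an Ansible inventory before running a playbook against it.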

How can you use Terraform to create resources?

- (Step 1) Write HCL Configuration Files: Use `.tf` files to define infrastructure using HashiCorp Configuration Language

- (Step 2) Initialize the Terraform Project: Run `terraform init` to download the necessary provider plugins and set up the local working directory

- (Step 3) Validate the Configuration: Use `terraform validate` to check for syntax errors or misconfigurations before any action is taken

- (Step 4) Preview Infrastructure Changes: Run `terraform plan` to see what Terraform intends to create, update, or destroy, which helps prevent surprises

- (Step 5) Apply the Configuration to Create Resources: Run `terraform apply` to actually provision the defined infrastructure after reviewing the plan

- (Step 6) Make Changes and Reapply: Modify the `.tf` files when requirements change, and repeat the `plan` → `apply` steps to update infrastructure

- (Step 7) Optional Cleanup with Destroy: Use `terraform destroy` to tear down all infrastructure managed by the configuration

- Declarative and Predictable: You define the desired outcome, and Terraform figures out how to make it a reality!

```hcl
# AWS Provider Configuration
# WHAT: Defines which cloud provider to use
# WHY: Needed for Terraform to know how to create resources
provider "aws" {
  region = "us-east-1" # Choose AWS region
}

# S3 Bucket Resource Configuration
# WHAT: Creates an AWS S3 bucket
# WHY: To store data/objects in AWS (like backups, static files, logs etc.)
resource "aws_s3_bucket" "my_s3_bucket" {
  bucket = "my-s3-bucket-in28minutes-rangake-002" # Bucket name must be globally unique

  # tags = {
  #   project = "my-first-terraform-project"
  # }
}

# Enables object versioning to keep history.
# In AWS provider v4+, versioning is managed with this separate resource;
# the inline `versioning` block on aws_s3_bucket is deprecated.
resource "aws_s3_bucket_versioning" "my_s3_bucket_versioning" {
  bucket = aws_s3_bucket.my_s3_bucket.id

  versioning_configuration {
    status = "Enabled"
  }
}

# IAM User Resource Configuration
# WHAT: Creates a new IAM user in AWS
resource "aws_iam_user" "my_iam_user" {
  name = "my-iam-user"
}
```

Why is Terraform called a Declarative Tool?

- How Terraform Works: Terraform uses a declarative approach to define infrastructure as code, compare it with the real-world state, and apply only the necessary changes to match the desired outcome

- Avoids Step-by-Step Instructions: You describe what you want, not how to get there — Terraform figures out the steps

- (Declarative) Define Desired State Using HCL: You write `.tf` files to describe the end result (e.g., an EC2 instance should exist with a certain type and AMI)

- (Plan) Terraform Creates an Execution Plan: It compares your configuration with the current infrastructure state and shows what it will create, update, or delete

- (Apply) Executes Only the Required Changes: Terraform applies the minimal set of actions needed to bring real infrastructure in sync with your code

- (State) Keeps Track of Resources in a State File: Maintains a snapshot of managed resources for future comparisons and updates

- (Key Difference: Imperative vs Declarative):

  - Imperative (e.g., Bash, Ansible): You tell the system exactly how to do each step (e.g., create subnet → then launch VM)

  - Declarative (Terraform): You declare the final outcome, and the tool decides the best way to achieve it

- (Advantage) Safer and More Predictable Changes: Declarative logic avoids manual sequencing and reduces errors

- Execution Plan Visibility: Terraform provides a detailed preview of all actions (create, update, delete) before they’re applied. This lets you catch unintended consequences (like deleting a production resource or altering a critical security group) before execution

- Idempotent Behavior: Running the same configuration multiple times yields the same result, with no additional changes — Terraform won’t recreate or modify resources that are already aligned with the desired state

- Minimizes Human Error Through Automation: Since Terraform computes resource dependencies and action ordering automatically, there’s no need to write imperative step-by-step logic — reducing the risk of mistakes during provisioning or teardown

- Makes Creating New Environments Safer: All changes are driven by the same version-controlled codebase. This ensures that every environment (dev, stage, prod) is built with the same structure and settings — making deployments consistent and repeatable
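The automatic dependency ordering described above can be illustrated with a small sketch (resource names and CIDR ranges are hypothetical; an `aws` provider is assumed to be configured). The subnet is deliberately declared before the VPC: because it references `aws_vpc.main.id`, Terraform infers the dependency, creates the VPC first, and on `terraform destroy` removes the subnet first.

```hcl
# Declared first on purpose: file order does not control creation order
resource "aws_subnet" "app" {
  vpc_id     = aws_vpc.main.id # reference = implicit dependency on the VPC
  cidr_block = "10.0.1.0/24"
}

# Created before the subnet because the subnet depends on it
resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"
}
```

Running `terraform apply` a second time without editing the file should report that no changes are needed, which is the idempotent behavior described in the list.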

Explain a Few Commands That Are Frequently Used with Terraform

- `terraform validate` – Check for Syntax Errors Early: Verifies that the `.tf` files are syntactically valid and internally consistent (run it after `terraform init`, since it needs the provider plugins)

- `terraform fmt` – Enforce Consistent Formatting: Automatically rewrites your Terraform files into the standard style for readability and consistency

- `terraform init` – Prepare the Project Directory: Initializes the working directory, downloads required provider plugins, and sets up the backend for storing Terraform state, if one is defined

- `terraform plan` – Preview Infrastructure Changes: Shows what Terraform will do (which resources will be added, changed, or destroyed) before you apply it

- `terraform apply` – Create or Update Resources: Executes the changes shown in the plan, provisioning or modifying cloud infrastructure as described in your code

- `terraform destroy` – Tear Down Infrastructure: Removes all resources managed by the configuration, which is useful for cleaning up test environments or demos
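Since `terraform init` is where the state backend gets set up, here is a minimal sketch of a remote backend that keeps the state file in S3. The bucket name and key are hypothetical placeholders, and the bucket is assumed to exist already. Backend blocks cannot reference variables, so the values are literals.

```hcl
terraform {
  # Store terraform.tfstate remotely instead of on a local disk
  backend "s3" {
    bucket  = "my-terraform-state-bucket" # hypothetical, must already exist
    key     = "dev/terraform.tfstate"     # path of the state file in the bucket
    region  = "us-east-1"
    encrypt = true                        # server-side encryption of the state
  }
}
```

After adding or changing a backend block, re-run `terraform init`; if local state already exists, Terraform offers to copy it to the new backend.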

What is the Terraform Plugin Architecture?

- Understanding Need for Extensibility and Multi-Cloud Support: Infrastructure teams need to provision resources across AWS, Azure, Google Cloud, Kubernetes, and SaaS providers — Terraform must support all of them without bloating up the core tool

- What is Terraform Plugin Architecture: A modular system where Terraform’s core communicates with separate provider plugins to manage external infrastructure

- Core Binary is Lightweight and Generic: The `terraform` CLI focuses on the core logic and delegates all resource-specific tasks to plugins

- Enables Flexible Integration with Different Platforms: Each provider knows how to talk to a specific platform (like AWS or GitHub)

- Providers Are Managed Externally: Each provider (e.g., `hashicorp/aws`, `hashicorp/azurerm`, `hashicorp/google`, `hashicorp/kubernetes`) is a separate binary downloaded during `terraform init`

- Build Your Own Custom Providers: Teams can build custom providers for internal platforms

- Keeps Terraform Core Clean and Scalable: New cloud services can be supported by updating or adding plugins — no need to change Terraform’s core

```hcl
# 🌍 AWS Provider
# Used to create AWS infrastructure like EC2, S3
provider "aws" {
  region = "us-east-1"
}

# ☁️ AzureRM Provider
# Used to manage Azure resources (VMs, Storage, etc.)
provider "azurerm" {
  features {} # 'features' block is mandatory even if empty
}

# 🟦 Google Cloud Provider
# Manages Google Cloud resources like GCE, GCS, Cloud Run
provider "google" {
  project = "my-gcp-project" # Google Cloud project ID
  region  = "us-central1"
}

# 🐳 Docker Provider
# WHAT: Used to manage Docker containers/images
provider "docker" {
  # Connects to the local Docker daemon by default
}

# 🐙 GitHub Provider
# WHAT: Manage repos, teams, etc. on GitHub
provider "github" {
  token = var.github_token
  owner = "in28minutes"
}

# Declares the token used above; supply it through the
# TF_VAR_github_token environment variable instead of hard-coding it
variable "github_token" {
  type      = string
  sensitive = true
}

# ☸️ Kubernetes Provider
# WHAT: Manage K8s resources (pods, services, etc.)
# HOW: Connect using kubeconfig file
provider "kubernetes" {
  config_path = "~/.kube/config"
}

# ✅ Notes
# - Add `required_providers` in `terraform {}` block
#   to pin versions (recommended)
```
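Following the note about `required_providers`, here is a sketch of a `terraform {}` block for the providers above. The version constraints are example values only, not recommendations; check the Terraform Registry for current releases. Declaring `source` matters most for Docker and GitHub, whose providers live outside the `hashicorp` namespace; without it, Terraform assumes `hashicorp/<name>`.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # example constraint
    }
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0" # example constraint
    }
    google = {
      source  = "hashicorp/google"
      version = "~> 5.0" # example constraint
    }
    docker = {
      source  = "kreuzwerker/docker" # not under the hashicorp namespace
      version = "~> 3.0"             # example constraint
    }
    github = {
      source  = "integrations/github" # not under the hashicorp namespace
      version = "~> 6.0"              # example constraint
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.0" # example constraint
    }
  }
}
```

`terraform init` records the exact versions it selects in `.terraform.lock.hcl`; committing that file keeps every teammate and CI run on the same provider builds.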

How Can Terraform Providers Authenticate to Cloud Platforms?

Authentication Methods: Terraform providers must authenticate securely to cloud platforms to manage infrastructure

- Recommended methods: Environment Variables (ideal for CI/CD), Shared Credential Files (convenient for local development), or IAM Roles (best practice for automation running in the cloud)

- Hard-coding in Code is NOT Recommended: Hard-coding credentials directly in the provider block is strongly discouraged

```hcl
# NOTE: Each METHOD below is an alternative. A single configuration
# can contain only one default (non-aliased) provider "aws" block.

# 🌍 METHOD 1: Environment Variables
# WHAT: Use OS-level variables to pass secrets
# WHEN: Recommended for CI/CD or local dev
# WHERE: Set in terminal or CI pipeline env config
# AWS EXAMPLE
#   export AWS_ACCESS_KEY_ID="your-key"
#   export AWS_SECRET_ACCESS_KEY="your-secret"
#   export AWS_REGION="us-west-2"
provider "aws" {
  # No credentials needed here if ENV vars are set
}

# 📂 METHOD 2: Shared Credential Files
# WHAT: Use provider CLI config files (e.g., AWS)
# WHY: Easy to use for local development
# Use "aws configure" to set the file up
provider "aws" {
  region                   = "us-west-2"
  shared_credentials_files = ["~/.aws/credentials"]
  profile                  = "default"
}

# 👤 METHOD 3: IAM Roles / Service Principals
# WHAT: Use temporary credentials from a role instead of long-lived keys
# WHY: Best practice for running automation in cloud
# WHEN: Inside EC2, CI runners or Cloud Shell; on EC2, credentials from
#       the attached instance profile are picked up automatically
# AWS IAM ROLE ASSUMPTION: the provider uses its base credentials to
# call AWS STS and switches to the role below
provider "aws" {
  region = "us-west-2"

  assume_role {
    role_arn     = "arn:aws:iam::123456789012:role/TerraformRole"
    session_name = "terraform-session"
  }
}

# 🛑 METHOD 4: Hardcoded in Provider Block (NOT RECOMMENDED)
# WHAT: Explicitly specify access credentials
# WHEN: Only for learning or quick testing
# AWS EXAMPLE (❌ Avoid in real projects)
provider "aws" {
  region     = "us-west-2"
  access_key = "AKIAIOSFODNN7EXAMPLE"
  secret_key = "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"
}

# ✅ RECOMMENDED BEST PRACTICE
# - NEVER commit access keys in code
```
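The methods above use AWS. As a rough sketch of the same ideas on the other clouds (see each provider's authentication documentation for the full set of options): the `azurerm` provider can read service principal credentials from `ARM_*` environment variables, and the `google` provider uses Application Default Credentials. The project ID and the `<...>` values are placeholders.

```hcl
# ☁️ Azure: service principal via environment variables
#   export ARM_CLIENT_ID="<app-id>"
#   export ARM_CLIENT_SECRET="<client-secret>"
#   export ARM_TENANT_ID="<tenant-id>"
#   export ARM_SUBSCRIPTION_ID="<subscription-id>"
# On an Azure VM with a managed identity, set ARM_USE_MSI=true
# instead of providing a client secret.
provider "azurerm" {
  features {}
  # No credentials here: they are read from the ARM_* variables
}

# 🟦 Google Cloud: Application Default Credentials (ADC)
#   Local dev: gcloud auth application-default login
#   CI/CD:     export GOOGLE_APPLICATION_CREDENTIALS="/path/to/key.json"
# On Google Cloud compute, the attached service account is used.
provider "google" {
  project = "my-gcp-project" # placeholder project ID
  region  = "us-central1"
}
```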