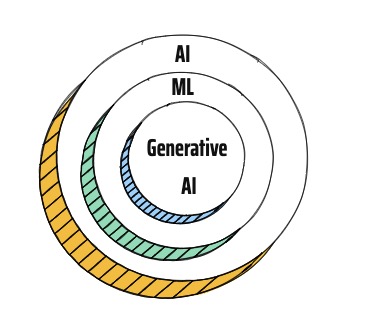

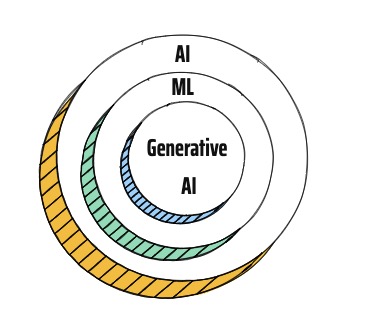

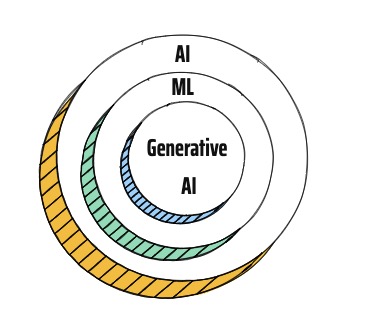

Compare AI vs ML #

What is Artificial Intelligence?

- Definition: A branch of computer science focused on building systems that can simulate human intelligence

- Goal: Enable machines to see, understand, learn, and make decisions

AI is All Around Us

- Self-driving Cars: Sense environment and make driving decisions

- Spam Filters: Detect and block unwanted emails

- Fraud Detection: Analyze behavior to flag suspicious transactions

- Recommendation Systems: Suggest music, movies, products based on past activity

- Email Sorting: Categorize emails into Primary, Promotions, and Social tabs

How is Traditional Programming done?

- Scenario: You want to predict house prices

- Traditional Programming: You write explicit rules — “IF location is X AND size is Y THEN price is Z”
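A minimal sketch of the rule-based approach described above. The locations, size threshold, and prices are made-up values chosen only for illustration, and `rule_based_price` is a hypothetical helper name.

```python
def rule_based_price(location: str, size_sqft: int) -> int:
    """Return a price from hand-written IF/THEN rules (all values are made up)."""
    if location == "downtown" and size_sqft >= 1000:
        return 500_000
    if location == "downtown":
        return 350_000
    if location == "suburb" and size_sqft >= 1000:
        return 300_000
    if location == "suburb":
        return 200_000
    # Any case the programmer did not anticipate needs yet another rule.
    raise ValueError(f"no rule covers location={location!r}")


print(rule_based_price("downtown", 1200))
```

Every new location or price band means editing the code by hand, which is the limitation that learning from data is meant to address.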

How does Machine Learning work?

- What Happens?: An ML system learns from data instead of following hand-written rules

- Machine Learning: Give examples → ML finds patterns → Predicts outcome

- Power: ML predictions generally improve as more relevant, good-quality data is provided

How Machine Learning Works

- Step 1: Feed Data – Provide historical examples

- Step 2: Train Model – ML learns patterns in data

- Step 3: Predict – Use trained model to predict on new data
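The three steps can be sketched for the house-price scenario with a one-feature linear model fitted by ordinary least squares. This is only an illustration: the sizes and prices are invented, and `train` and `predict` are hypothetical helper names rather than any framework's API.

```python
def train(sizes, prices):
    """Step 2: fit price = slope * size + intercept by ordinary least squares."""
    n = len(sizes)
    mean_x = sum(sizes) / n
    mean_y = sum(prices) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(sizes, prices))
    var = sum((x - mean_x) ** 2 for x in sizes)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, size):
    """Step 3: apply the learned parameters to a new input."""
    slope, intercept = model
    return slope * size + intercept


# Step 1: feed historical examples (made-up data).
sizes = [800, 1000, 1200, 1500]
prices = [160_000, 200_000, 240_000, 300_000]

model = train(sizes, prices)
print(round(predict(model, 1100)))  # prints 220000
```

No price rule was written by hand here: the slope and intercept come entirely from the examples.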

| Aspect | Artificial Intelligence (AI) | Machine Learning (ML) |

|---|---|---|

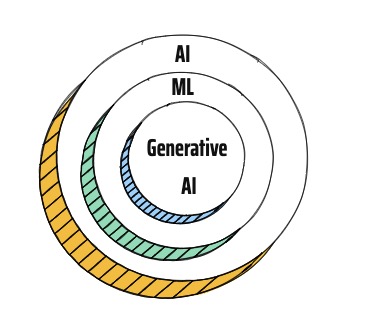

| Purpose | Simulate human intelligence | Enable machines to learn from data |

| Scope | Broad — includes reasoning, learning, and problem-solving | Narrower — focused mainly on learning from data |

| Relation | ML is a subset of AI | Part of AI |

| Data Dependency | May or may not use data | Strongly depends on large amounts of data |

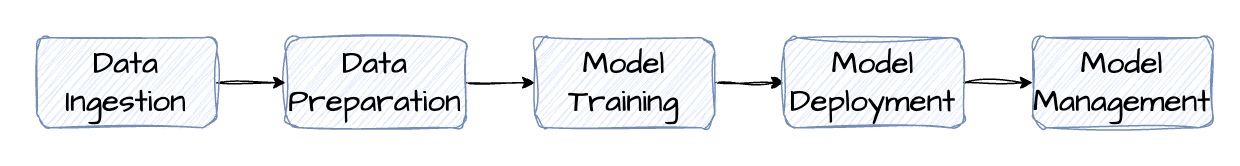

What are the Key Steps in Machine Learning Lifecycle? #

Step 1: Data Ingestion

- Collect data from real-world sources

- Examples: User clicks, sales records, logs, images, transaction systems, big data systems, etc.

Step 2: Data Preparation

- Clean and transform data

- Examples: Remove duplicates, handle missing values, etc.
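A small sketch of the two preparation examples above, assuming records arrive as dictionaries. The field names `user` and `amount` and the mean-fill strategy are assumptions made for illustration; real pipelines choose an imputation strategy per column.

```python
def prepare(records):
    """Drop exact duplicates, then fill missing 'amount' values with the mean."""
    seen = set()
    unique = []
    for rec in records:
        key = (rec["user"], rec["amount"])
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))  # copy so the raw input is left untouched

    known = [r["amount"] for r in unique if r["amount"] is not None]
    mean_amount = sum(known) / len(known) if known else 0.0
    for r in unique:
        if r["amount"] is None:
            r["amount"] = mean_amount
    return unique


raw = [
    {"user": "a", "amount": 10.0},
    {"user": "a", "amount": 10.0},  # duplicate row
    {"user": "b", "amount": None},  # missing value
    {"user": "c", "amount": 30.0},
]
clean = prepare(raw)
print(clean)
```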

Step 3: Model Training

- Use training data to build a prediction model

- Use frameworks like TensorFlow, PyTorch, scikit-learn

Step 4: Model Deployment

- Make model available to real-world systems

- Examples: Integrate model into mobile app or website

Step 5: Model Management

- Track model performance over time

- Actions: Retrain with new data

End-to-End Example: Spam Detection

- Ingest: Pull email history

- Prepare: Label spam vs not spam

- Train: Learn patterns from labeled data

- Deploy: Filter incoming emails

- Manage: Keep accuracy high as spam tactics evolve
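The spam example can be sketched end to end with a tiny naive Bayes classifier written in plain Python. The emails, the `not_spam` label name, and the helper names are all invented for illustration; a production filter would use far more data and typically a framework such as scikit-learn.

```python
import math
from collections import Counter

# Ingest + Prepare: a tiny, made-up labeled email history.
TRAIN = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "not_spam"),
    ("lunch on monday with the team", "not_spam"),
]


def train(examples):
    """Train: count words per label for a naive Bayes classifier."""
    word_counts = {}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)
    return word_counts, label_counts, vocab


def classify(model, text):
    """Deploy: score an incoming email using add-one (Laplace) smoothing."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        score = math.log(label_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)


def accuracy(model, labeled):
    """Manage: measure accuracy on freshly labeled mail to decide on retraining."""
    hits = sum(classify(model, text) == label for text, label in labeled)
    return hits / len(labeled)


model = train(TRAIN)
print(classify(model, "free prize now"))
print(classify(model, "agenda for the team meeting"))
print(accuracy(model, TRAIN))
```

In the Manage step, `accuracy` would be run periodically on newly labeled emails; a drop below a chosen threshold is the signal to retrain with the newer data.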

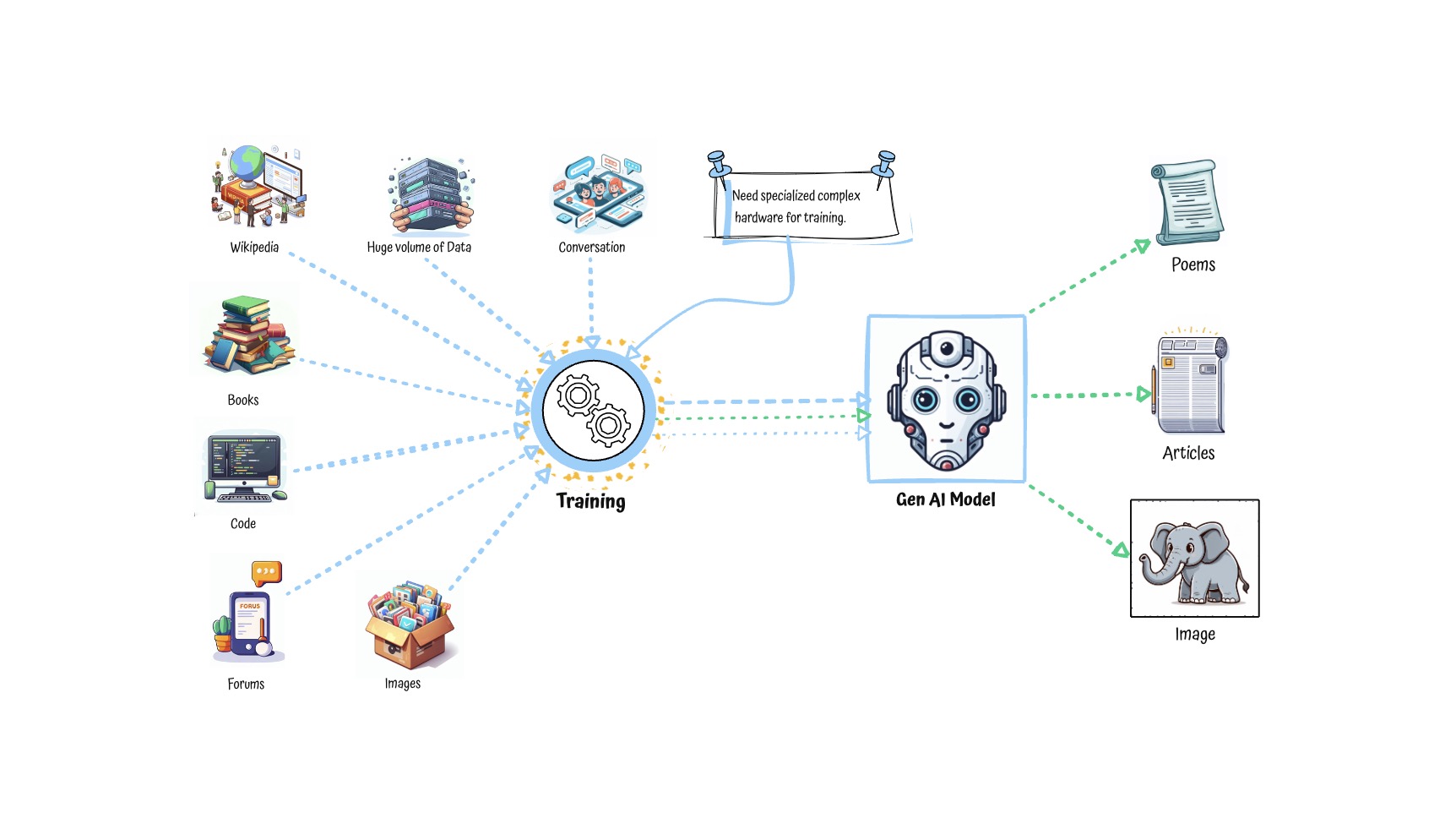

What is Generative AI? #

Why Generative AI?

- Scenario: Traditional ML models can detect spam or predict house prices. But what if we want the machine to write an email, generate an image, or create a video?

- Generative AI: A special kind of AI that creates new content like text, code, images, and audio, rather than only making predictions.

- Definition: A subset of Machine Learning that creates original content from patterns it has learned from training data

- Goal: Move from consuming data to generating data

- Examples: Writing poems, answering questions, generating software code, creating art

How is Generative AI Different from Traditional ML?

| Aspect | Generative AI | Traditional ML |
|---|---|---|
| Purpose | Create new content (text, images, code, etc.) | Make predictions or classifications |
| Output Type | Original and creative outputs | Structured outputs like labels or numbers |
| Examples | ChatGPT, DALL·E, GitHub Copilot | Fraud detection, stock price prediction |
| Training Data | Requires massive datasets and compute power | Can work with smaller, structured datasets |
| Use Cases | Content generation, summarization, coding help | Forecasting, classification, etc. |
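To make the "Output Type" row concrete, here is a toy generator that learns which word follows which and then samples a new sentence. It is a bigram (Markov chain) sketch with a made-up corpus, standing in for the idea of generating content from learned patterns; it is not how foundation models are built, and the helper names are hypothetical.

```python
import random
from collections import defaultdict


def train_bigrams(corpus):
    """Learn which words were seen following each word (the 'patterns')."""
    followers = defaultdict(list)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers


def generate(followers, start, max_words=8, seed=0):
    """Build a new word sequence by repeatedly sampling a learned follower."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    words = [start]
    while len(words) < max_words and followers.get(words[-1]):
        words.append(rng.choice(followers[words[-1]]))
    return " ".join(words)


corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]
model = train_bigrams(corpus)
print(generate(model, "the"))
```

A classifier trained on the same corpus would return a label or a number; this model returns a word sequence that may not appear verbatim in the training data.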

How does Generative AI Work?

- Foundation Models Power Generative AI

  - Definition: Huge pre-trained models trained on broad data (text, code, images)

  - Reusable: One model can handle many tasks (chat, translate, summarize)

  - Customizable: Many apps fine-tune foundation models for their specific needs

- Training Foundation Models is Complex

  - Massive Datasets: Learn from books, code, web pages, image captions

  - Expensive Training: Needs GPUs, TPUs, distributed storage

  - Takes Time: Training can take weeks or months, depending on size

- Key Limitations of Foundation Models

  - Data Dependency: Garbage in, garbage out

    - Model quality depends on training data

    - If trained on biased or outdated info, it will produce flawed results

  - Knowledge Cutoff: Models freeze in time

    - Cannot access real-time data on their own

    - Cannot tell you what happened after their training period

  - Hallucinations: Confident but incorrect responses

    - May generate fake facts, URLs, citations, or statistics

  - Bias: Inherits bias from training data

    - Can unintentionally favor certain groups over others

  - Edge Cases: Uncommon inputs often fail

    - Models may behave unexpectedly with unusual or rare queries

- Reducing Hallucinations with Grounding

  - Grounding: Link AI outputs to verified data

    - Example: Connect chatbot to trusted company documents

  - Benefits:

    - Reduces hallucinations

    - Provides sources

  - Popular Technique: RAG (Retrieval-Augmented Generation)

    - Step 1: Search trusted documents

    - Step 2: Add findings to the prompt

    - Step 3: Let model generate based on that data
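The three RAG steps can be sketched as follows. Several things here are assumptions made for illustration: retrieval uses simple word overlap (real systems commonly use embeddings and a vector index), the documents are made up, and `generate` is a hypothetical placeholder standing in for a real foundation-model API call.

```python
import re


def tokens(text):
    """Lowercase a string and split it into alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(documents, question, top_k=1):
    """Step 1: rank trusted documents by word overlap with the question."""
    q_words = tokens(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(q_words & tokens(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, passages):
    """Step 2: add the retrieved passages to the prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the answer is not there, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


def generate(prompt):
    """Step 3: hypothetical placeholder for a real foundation-model call."""
    return f"[model response based on a {len(prompt)}-character grounded prompt]"


DOCS = [
    "Refunds are accepted within 30 days of purchase.",
    "Support is available on weekdays from 9am to 5pm.",
]
question = "When are refunds accepted?"
prompt = build_prompt(question, search(DOCS, question))
print(prompt)
print(generate(prompt))
```

Because the retrieved passage travels inside the prompt, the application can also show it to the user as the source of the answer.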

How did the ML landscape change after Generative AI? #

ML Landscape Before Generative AI

- 3 Typical Approaches: Prebuilt ML APIs, AutoML tools, or full custom models

- ML APIs: Use ready-made APIs for text, speech, image, or video tasks

  - Use Case: Convert customer calls to text using Speech-to-Text API

  - Use Case: Detect objects in product images using Vision API

  - Use Case: Classify customer reviews as positive or negative

- AutoML: Train custom models without ML expertise by uploading labeled data; the platform handles training and deployment

  - Use Case: Classify support tickets into categories using labeled examples

  - Use Case: Categorize pet images (e.g., dogs vs cats) by uploading a labeled dataset

- Custom Models: Full control for ML teams using frameworks like TensorFlow, PyTorch, scikit-learn; bring your own data and code

  - Use Case: Build a custom model to forecast daily sales using historical data

  - Use Case: Train a deep learning model for detecting anomalies in financial transactions

Generative AI Brought in New Capabilities

- Foundation Models Introduced: Pre-trained on massive datasets to generate text, code, audio, and images

  - Use Case: Generate product descriptions automatically for e-commerce listings

  - Use Case: Write code snippets based on natural language prompts

- New Capabilities: Summarization, chat, translation, and content generation via simple APIs

  - Use Case: Summarize long customer feedback into short highlights

  - Use Case: Translate product manuals into multiple languages

- Models Can Be Tuned Easily: Foundation models can be fine-tuned with your own data to adjust tone, context, or behavior

  - Use Case: Fine-tune a chatbot to use your company’s tone and answer product-specific questions

ML Landscape After Generative AI

- Use Pretrained APIs (NO CHANGE): Use ready-made APIs for text, speech, image, or video tasks

- Use Foundation Models via API (NEW): New capabilities such as chat, summarize, translate, generate code

  - Use Case: Summarize long customer feedback into short highlights

- Customize Foundation Models (NEW): Fine-tune on your own data

  - Use Case: Fine-tune a chatbot to use your company’s tone and answer product-specific questions

- Train with AutoML (NO CHANGE): Build models without writing code

  - Use Case: Categorize pet images (e.g., dogs vs cats) by uploading a labeled dataset

- Build Custom Models (NO CHANGE): Use your own infra and tools if full control is needed

  - Use Case: Train a deep learning model for detecting anomalies in financial transactions

Key Shift After GenAI

- Platforms now support both traditional ML and GenAI paths
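One way to read the five paths is as a small decision helper. The path names come from the list above, but the order in which the questions are asked and the parameter names are assumptions for illustration, not a rule stated in these notes.

```python
def choose_approach(
    needs_generation: bool,
    needs_custom_behavior: bool = False,
    prebuilt_api_fits: bool = False,
    has_labeled_data: bool = False,
    needs_full_control: bool = False,
) -> str:
    """Map project needs to one of the five paths (question order is an assumption)."""
    if needs_generation:
        # GenAI path: start with a hosted model, fine-tune only if required.
        if needs_custom_behavior:
            return "Customize Foundation Models"
        return "Use Foundation Models via API"
    if prebuilt_api_fits:
        return "Use Pretrained APIs"
    if has_labeled_data and not needs_full_control:
        return "Train with AutoML"
    return "Build Custom Models"


# Summarizing customer feedback: generation needed, no special tone.
print(choose_approach(needs_generation=True))
# Categorizing pet images from a labeled dataset.
print(choose_approach(needs_generation=False, has_labeled_data=True))
```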

Managed Services In Different Cloud Platforms for AI and ML #

| Category | Description | AWS | Azure | Google Cloud |
|---|---|---|---|---|
| Use Pretrained APIs | Use ready-made APIs for text, speech, image, or video tasks | Amazon Rekognition (image and video analysis), Amazon Comprehend (text analytics), etc. | Azure AI Vision (image analysis), Azure AI Language (text analysis), etc. | Google Cloud Vision AI (image analysis), Google Cloud Natural Language AI (text analysis), etc. |
| Use Foundation Models via API | New capabilities: chat, summarize, translate, generate code | Amazon Bedrock | Azure AI Foundry | Vertex AI Model Garden |
| Customize Foundation Models | Fine-tune supported foundation models on your own data (proprietary and open models such as Anthropic Claude, Meta Llama, and Mistral) | Amazon Bedrock | Azure AI Foundry | Vertex AI Model Garden |
| Train with AutoML | Build models without writing code | Amazon SageMaker Autopilot | Azure Machine Learning Automated ML | Google Cloud AutoML |
| Build Custom Models | Platforms for building, training, and deploying custom ML models using frameworks such as TensorFlow | Amazon SageMaker | Azure Machine Learning | Vertex AI Custom Training |